
Foundationally, the OWASP Top 10 for Large Language Model (LLM) Applications was designed to educate software developers, security architects, and other hands-on practitioners about how to implement more secure AI workloads.

The framework specifies the potential security risks associated with deploying and managing LLM applications by explicitly naming the most critical vulnerabilities observed in LLMs to date and describing how to mitigate them. There is a plethora of resources on the web that document the need for and benefits of an open-source risk management project like the OWASP Top 10 for LLMs. In fact, the project has now grown into the comprehensive OWASP GenAI Security Project, a global effort that covers multiple security initiatives beyond just the Top 10 list.

Many practitioners struggle to discern how cross-functional teams can align to better manage the rollout of Generative AI (GenAI) technologies within their organizations. There is a pressing need in the market for comprehensive security controls that facilitate the secure deployment of GenAI workloads. In addition, CISOs need a clearer picture of how these projects can help and of how the OWASP Top 10 for LLMs differs from other industry threat mapping frameworks, such as MITRE ATT&CK and MITRE ATLAS.

Understanding the differences between AI, ML, & LLMs

Artificial Intelligence (AI) has undergone monumental growth over the past few decades. If we go back as far as 1951, a year after Isaac Asimov published his science fiction concept, "Three Laws of Robotics," the first AI program was written by Christopher Strachey to play checkers (or draughts, as it's known in the UK).

Where AI is a broad term that covers every field of computer science that enables machines to accomplish tasks resembling human behavior, Machine Learning (ML) and GenAI are two clearly defined subcategories of AI.

ML was not replaced by GenAI. ML algorithms tend to be trained on a set of data, learn from that data, and are often used to make predictions. These statistical models can be used to predict the weather or detect anomalous behavior. They remain a key part of our financial/banking systems and are used regularly in cybersecurity to detect unwanted behavior.

GenAI, on the other hand, has its own unique set of use cases. You can think of it as a type of ML that creates new data. GenAI often utilizes LLMs to synthesize existing data and leverage it to create something new. Examples include services like ChatGPT and Sysdig Sage™. As the AI ecosystem rapidly evolves, organizations are increasingly deploying GenAI solutions — such as Llama 2, Midjourney, and ElevenLabs — into their cloud-native and Kubernetes environments to leverage the benefits of high scalability and seamless orchestration in the cloud.

This shift is accelerating the need for robust, cloud-native security frameworks that can safeguard AI workloads. In this context, the distinctions between AI, machine learning (ML), and LLMs are critical to understanding the security implications and the governance models required to manage them effectively.

OWASP Top 10 and Kubernetes

As businesses integrate tools like Llama into cloud-native environments, they often rely on platforms like Kubernetes to manage these AI workloads efficiently. This transition to cloud-native infrastructure introduces a new layer of complexity, as highlighted in the OWASP Top 10 for Kubernetes and the broader OWASP Top 10 for Cloud-Native systems guidance.

The flexibility and scalability offered by Kubernetes make it easier to deploy and scale GenAI models, but these models also introduce a whole new attack surface to your organization, and security leadership needs to take note. A containerized AI model running on a cloud platform is subject to a significantly different set of security concerns than a traditional on-premises deployment or other cloud-native, containerized workloads. That difference underscores the need for comprehensive security tooling that provides proper visibility into the risks associated with this rapid adoption of AI.

Who is responsible for trustworthy AI?

GenAI advancements and benefits will continue in the years ahead, and with each innovation, new security challenges will arise. A trustworthy AI will need to be reliable, resilient, and responsible for securing both internal data and sensitive customer data.

From a regulatory perspective, the EU AI Act is the first comprehensive AI law. It entered into force on 1 August 2024, with its obligations phasing in from 2025 onward. Since the EU's General Data Protection Regulation (GDPR) was not specifically designed with LLM use in mind, its broad coverage applies to AI systems only through generalized principles of data collection, data security, fairness and transparency, accuracy and reliability, and accountability.

Unlike the EU, the U.S. currently does not have a comprehensive federal law dedicated to AI systems. This has led some states to create their own regulations, producing a growing patchwork of state-level AI laws, with over 20 states considering or having passed AI regulations. It is essentially an evolving race toward official AI governance.

Ultimately, responsibility for trustworthy AI is shared among developers, security engineering teams, and leadership, who must proactively ensure their AI systems are reliable, secure, and ethical rather than waiting for government regulations, such as the EU AI Act, to enforce compliance.

Incorporate LLM security & governance

While we wait for formally defined governance standards for AI in various countries, what can we do in the meantime? The advice is simple: we should implement existing, established practices and controls. While GenAI adds a new dimension to cybersecurity, resilience, privacy, and compliance with legal and regulatory requirements, the best practices that have been around for a long time are still a sound way to address AI security.

AI asset inventory

It's important to recognize that an AI asset inventory should apply to both internally developed AND external or third-party AI solutions. As such, there is a clear need to catalog existing AI services, tools, and owners by designating a tag in asset management for specific AI inventory. Sysdig's approach is two-fold. Our AI workload security solution auto-identifies where AI packages are running in your environment. This insight, together with other signals such as runtime events, vulnerabilities, and misconfigurations, is used to surface AI risk.
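As a rough illustration of the tagging idea, the sketch below registers AI assets under a dedicated inventory tag and flags the ones that have no owner. Everything here is an assumption made for illustration: the `AssetRecord` type, the `ai-inventory` tag name, and the asset names are hypothetical and do not reflect how Sysdig or any particular asset management tool models this data.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical tag that marks an entry as part of the AI inventory.
AI_TAG = "ai-inventory"


@dataclass
class AssetRecord:
    name: str
    source: str                      # "internal" or "third-party"
    owner: Optional[str] = None      # team accountable for the asset
    tags: set = field(default_factory=set)


def register_ai_asset(catalog, name, source, owner=None):
    """Add an asset to the catalog and tag it as an AI asset."""
    record = AssetRecord(name=name, source=source, owner=owner, tags={AI_TAG})
    catalog.append(record)
    return record


def unowned_ai_assets(catalog):
    """Return names of AI-tagged assets that have no designated owner."""
    return [r.name for r in catalog if AI_TAG in r.tags and not r.owner]


# The catalog already holds a non-AI asset; AI assets are added with the tag.
catalog = [AssetRecord("billing-api", "internal", "finance-team")]
register_ai_asset(catalog, "support-chatbot", "internal", owner="ml-platform")
register_ai_asset(catalog, "hosted-llm-api", "third-party")

print(unowned_ai_assets(catalog))
```

Keeping internal and third-party entries in the same catalog, separated only by the `source` field, means a single query can surface ownership gaps across both kinds of AI solution.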

Sysdig also helps organizations include AI components in the Software Bill of Materials (SBOM), allowing security teams to generate a comprehensive list of all the software components, dependencies, and metadata associated with their GenAI workloads. By identifying and cataloging AI data sources, security teams can better prioritize AI workloads based on their risk severity level.

Posture management

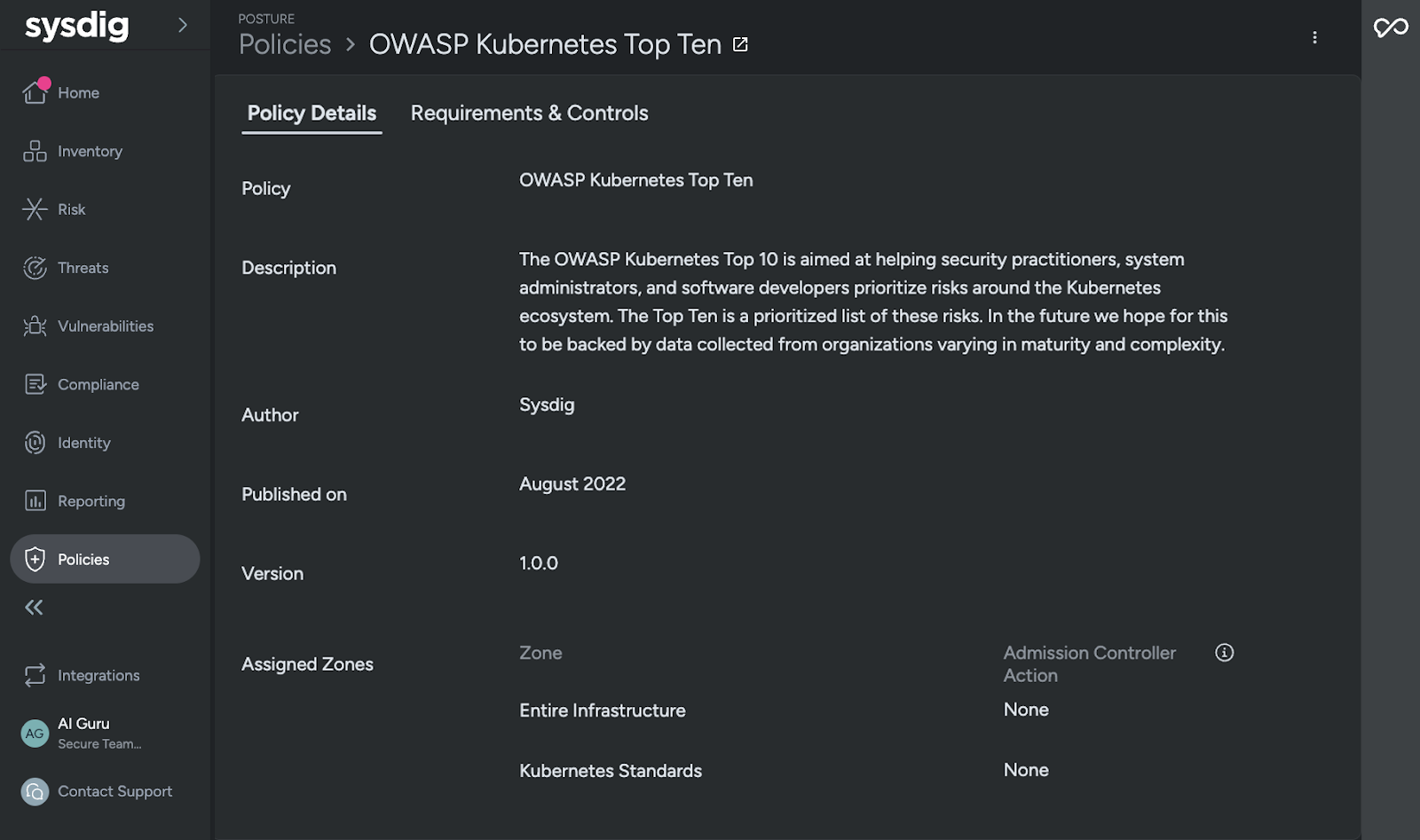

From a posture perspective, you should have a tool that appropriately reports on the OWASP Top 10 findings. With Sysdig, these reports are pre-packaged, eliminating the need for end-users to configure custom controls. This speeds up report generation while ensuring more accurate context.

Since a preponderance of LLM-based workloads run in Kubernetes, it remains as vital as ever to ensure adherence to the various security posture controls highlighted in the OWASP Top 10 for Kubernetes.
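To make the idea of a posture control concrete, here is a minimal sketch that inspects a pod manifest (already parsed into a Python dictionary) for a few common workload misconfigurations. The `securityContext`, `privileged`, `runAsNonRoot`, and `resources.limits` fields are standard Kubernetes pod fields; the function name, the example pod, and the choice of these three checks are assumptions for illustration, not a complete implementation of the OWASP Top 10 for Kubernetes or of any vendor's pre-packaged report.

```python
def posture_findings(pod):
    """Return a list of human-readable findings for one pod manifest."""
    findings = []
    spec = pod.get("spec", {})
    pod_sec = spec.get("securityContext", {})
    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        sec = container.get("securityContext", {})
        # Privileged containers have broad access to the host.
        if sec.get("privileged", False):
            findings.append(f"{name}: runs privileged")
        # Container-level setting wins; otherwise fall back to the pod level.
        non_root = sec.get("runAsNonRoot", pod_sec.get("runAsNonRoot", False))
        if not non_root:
            findings.append(f"{name}: runAsNonRoot not set")
        # Missing limits let one workload starve others on the node.
        if "limits" not in container.get("resources", {}):
            findings.append(f"{name}: no resource limits")
    return findings


# Hypothetical pod running an LLM server next to a well-configured sidecar.
example_pod = {
    "metadata": {"name": "llm-inference"},
    "spec": {
        "containers": [
            {"name": "llm-server", "securityContext": {"privileged": True}},
            {
                "name": "metrics-sidecar",
                "securityContext": {"runAsNonRoot": True},
                "resources": {"limits": {"cpu": "250m", "memory": "128Mi"}},
            },
        ]
    },
}

for finding in posture_findings(example_pod):
    print(finding)
```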

Furthermore, mapping a business's LLM security strategy to MITRE ATLAS helps that organization determine where its LLM security is already covered by current processes, such as API security standards, and where gaps may remain. MITRE ATLAS, which stands for "Adversarial Threat Landscape for Artificial Intelligence Systems," is a knowledge base built on real-life examples of attacks on ML systems by known bad actors. Whereas the OWASP Top 10 for LLMs provides guidance on where to harden your proactive LLM security strategy, MITRE ATLAS findings can be aligned with your threat detection rules in Falco or Sysdig to better understand the Tactics, Techniques, & Procedures (TTPs) involved, which follow the well-known MITRE ATT&CK structure.
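One way to picture this alignment is a simple coverage check: list the techniques you care about, tag each detection rule with the technique it addresses, and report which techniques have no rule. The sketch below assumes rules are represented as dictionaries with `rule` and `tags` keys, loosely mirroring the shape of a Falco rule definition. The technique labels are shorthand chosen for this example rather than official MITRE ATLAS identifiers, and the rule names are hypothetical.

```python
# Shorthand technique labels (assumption; look up real IDs in MITRE ATLAS).
TECHNIQUES_IN_SCOPE = [
    "prompt-injection",
    "supply-chain-compromise",
    "model-exfiltration",
]

# Hypothetical detection rules, each tagged with the techniques it covers.
detection_rules = [
    {"rule": "Unexpected outbound connection from model server",
     "tags": ["model-exfiltration"]},
    {"rule": "Package installed in running AI container",
     "tags": ["supply-chain-compromise"]},
    {"rule": "Shell spawned in AI container", "tags": []},
]


def coverage(techniques, rules):
    """Map each in-scope technique to the rules tagged with it."""
    covered = {technique: [] for technique in techniques}
    for rule in rules:
        for tag in rule["tags"]:
            if tag in covered:
                covered[tag].append(rule["rule"])
    return covered


def gaps(coverage_map):
    """Return the techniques that no detection rule covers."""
    return [t for t, rules in coverage_map.items() if not rules]


coverage_map = coverage(TECHNIQUES_IN_SCOPE, detection_rules)
print(gaps(coverage_map))
```

A result like this points to where proactive hardening, guided by the OWASP Top 10 for LLMs, has to carry the load until a matching detection exists.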

Conclusion

Introducing LLM-based workloads into your cloud-native environment expands your business's existing attack surface. Naturally, as highlighted in the official release of the OWASP Top 10 for LLM Applications, this poses new challenges that require specialized tactics and defenses, such as those provided by frameworks like MITRE ATLAS.

AI workloads running in Kubernetes will benefit from standard Kubernetes security best practices, including the established cybersecurity post-reporting procedures and the OWASP Top 10 mitigation strategies for Kubernetes. Integrating the OWASP Top 10 for LLMs into your existing cloud security controls, processes, and procedures will also help your business significantly reduce its exposure to evolving AI threats.

Want to learn more about GenAI security? Grab a copy of Securing AI: Navigating a New Frontier of Security Risk.