
Just as a software bill of materials (SBOM) helps expose hidden risks in traditional applications, an AI bill of materials (AIBOM) brings clarity to AI systems. An AIBOM documents the full AI infrastructure of an organization, from GPUs and containers to datasets and APIs, giving security leaders and their teams a roadmap to find and assess risks and enforce accountability.
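To make the idea concrete, here is a minimal sketch of what an AIBOM inventory might look like in code. The `Component` and `AIBOM` classes, their field names, and the example components are hypothetical and do not follow any standard schema; a production AIBOM would more likely be expressed in an established BOM standard such as CycloneDX. The sketch groups components by infrastructure layer and flags any that lack a recorded owner, which is one simple way an inventory can support accountability.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


# Hypothetical AIBOM entry: field names are illustrative, not a standard schema.
@dataclass
class Component:
    name: str
    layer: str                   # e.g. "hardware", "container", "dataset", "api"
    version: str
    owner: Optional[str] = None  # team accountable for this component, if known


@dataclass
class AIBOM:
    system: str
    components: List[Component] = field(default_factory=list)

    def by_layer(self) -> Dict[str, List[str]]:
        """Group component names by infrastructure layer."""
        grouped: Dict[str, List[str]] = {}
        for c in self.components:
            grouped.setdefault(c.layer, []).append(c.name)
        return grouped

    def unowned(self) -> List[str]:
        """Return names of components with no accountable owner recorded."""
        return [c.name for c in self.components if not c.owner]


# Hypothetical inventory for an example AI-backed support chatbot.
bom = AIBOM(
    system="support-chatbot",
    components=[
        Component("gpu-node-pool", "hardware", "pool-2", owner="platform"),
        Component("inference-image", "container", "1.4.2", owner="ml-eng"),
        Component("support-tickets-2023", "dataset", "v3"),
        Component("llm-provider-api", "api", "2024-06", owner="ml-eng"),
    ],
)

print(bom.by_layer())
print(bom.unowned())  # ['support-tickets-2023']
```

Even a flat list like this answers the first questions a security team asks of an AI system: what it is built from, and who is responsible for each piece.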

A majority of organizations are racing to embrace AI: automating code generation, embedding models into customer service workflows, and accelerating marketing efforts in pursuit of competitive advantage. Security teams feel the pressure of a dual mandate: add AI to their own security workflows, and secure the AI that the rest of the organization is being told to adopt. As a result, however quickly innovation moves, security is working overtime to keep pace.

Introducing AIBOM: The infrastructure, risks, and how to secure AI models

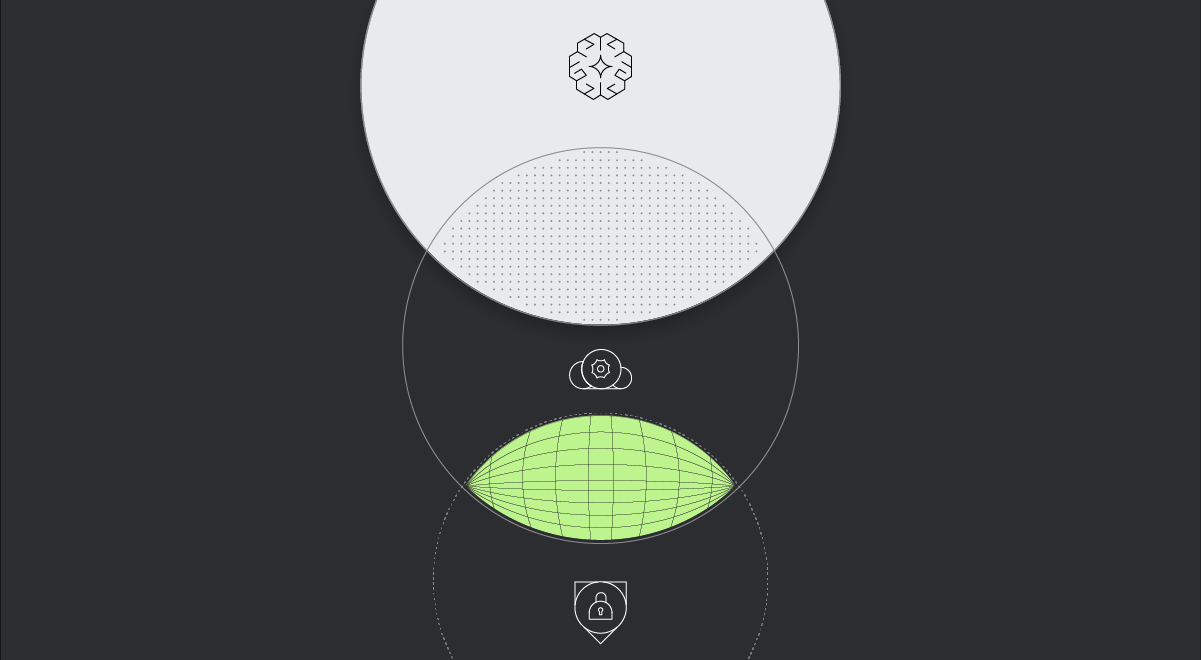

The truth is, AI doesn’t introduce entirely new security problems; it reintroduces familiar challenges in new places. AI runs on the same cloud-native infrastructure you already secure, such as containerized base images and orchestration platforms. Sysdig’s new paper, AIBOM: The infrastructure, risks, and how to secure AI models, breaks down the components of an AI model and shows how familiar cloud-native security controls (and risks) extend to AI workloads.


AI doesn’t need to be shrouded in mystery. With a detailed AIBOM, you can document what’s inside, identify where your risks live, and confidently secure your innovation. You’ve already secured containers, cloud workloads, and CI/CD pipelines; securing AI isn’t much different.

Get the full breakdown and see how you can apply what you already know to the world of AI.