What is an MCP server?

A Model Context Protocol server is a software component that implements the Model Context Protocol and acts as an intermediary between AI models and external tools and data, enabling the models to carry out tasks and access information.

Anthropic introduced the Model Context Protocol (MCP) as an open standard protocol that serves as a connector, translating requests between AI systems and other services. Think of MCP as a secure bridge between large language models (LLMs) and external application APIs.

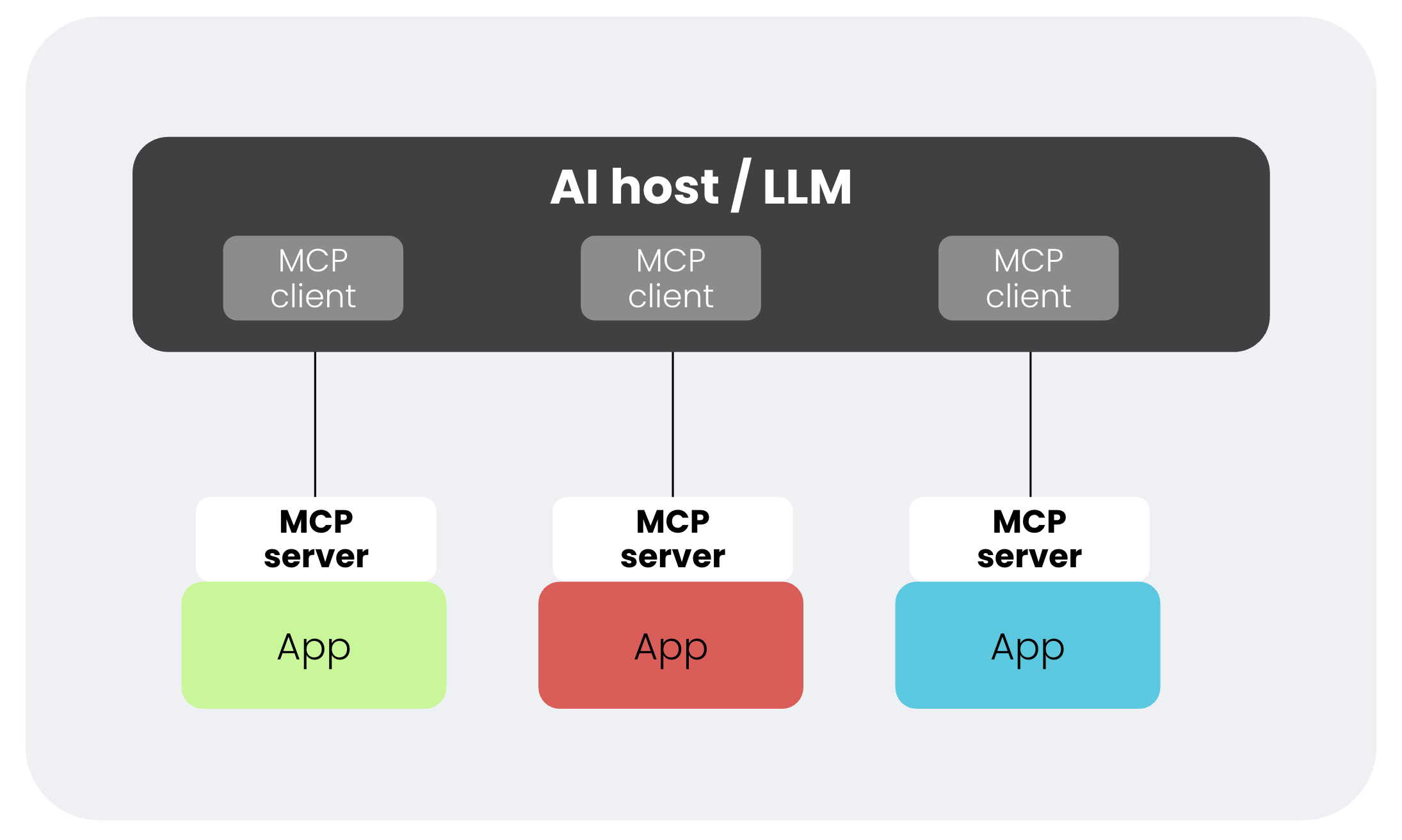

MCP provides a consistent framework that defines how AI models can discover, understand, and interact with data sources including databases, file systems, and SaaS applications like cloud security solutions. It follows a client-server architecture with two main components: an MCP client and an MCP server.

The rise of the MCP server

Before Anthropic released MCP, connecting AI agents to external data and tools was a struggle. To connect data and applications to an LLM, users had to build a custom connector for every individual tool or data source. That meant a lot of time spent developing custom code and then updating it whenever a new version of an LLM was released.

So Anthropic introduced MCP as an open-source, standardized framework that gives AI agents easier access to data and tools. The company also released MCP servers alongside the protocol to provide a universal connector between AI agents and their tools and data sources.

Why MCP servers are important

Before MCP servers, AI agents were limited to narrow use cases and to the data their models were trained on. The custom connectors that organizations built often didn’t scale and continued to limit what AI agents could do.

With an MCP server, organizations can connect an MCP-compatible LLM application to specific data sources and give it access to the latest data, which strengthens what AI agents can do. MCP simplifies communication between AI agents and external data and tools, and it scales: there is no need to build a custom connection between each data source or tool and each AI application.

How MCP works

At a high level, MCP consists of two core components:

- MCP client: The “client-side” component that resides with the AI application like Claude, ChatGPT, or Gemini, and is used to request connections to one or more MCP servers in order to access external information.

- MCP server: A standardized program that acts like a controlled gateway to enable tools or APIs to retrieve the data requested by the MCP client from a target system.

When the AI system wants information — say, the latest security alerts — it sends a structured request via an MCP client to the MCP server. The server validates the request, checks permissions, executes the necessary API calls, and returns the results in a format the model understands. This creates a secure, observable, and standardized loop between AI reasoning and enterprise data.
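The loop described above can be sketched in a few lines of Python. MCP messages are JSON-RPC 2.0, and tool invocations use the `tools/call` method; everything else here is an assumption made for illustration: the `list_recent_alerts` tool, the `ALLOWED_TOOLS` permission table, the `caller_role` argument, and the `-32000` error code are hypothetical, and the stubbed function stands in for a real API call.

```python
import json

# Hypothetical permission table: which caller role may invoke which tool.
ALLOWED_TOOLS = {"analyst": {"list_recent_alerts"}}


def list_recent_alerts(arguments):
    # Stand-in for a real API call to the target system.
    alerts = ["alert-1", "alert-2", "alert-3"]
    return alerts[: arguments.get("limit", 2)]


TOOLS = {"list_recent_alerts": list_recent_alerts}


def handle_request(raw, caller_role):
    """Validate, authorize, execute, and answer one JSON-RPC 2.0 request."""
    request = json.loads(raw)
    request_id = request.get("id")

    def error(code, message):
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": code, "message": message}}

    # 1. Validate the request.
    if request.get("jsonrpc") != "2.0":
        return error(-32600, "Invalid request")
    if request.get("method") != "tools/call":
        return error(-32601, "Method not found")
    params = request.get("params", {})
    name = params.get("name")
    if name not in TOOLS:
        return error(-32602, f"Unknown tool: {name}")

    # 2. Check permissions (error code chosen for illustration).
    if name not in ALLOWED_TOOLS.get(caller_role, set()):
        return error(-32000, "Permission denied")

    # 3. Execute the underlying call.
    result = TOOLS[name](params.get("arguments", {}))

    # 4. Return the result as text content the model can read.
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"content": [{"type": "text", "text": json.dumps(result)}]}}


request = json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "list_recent_alerts", "arguments": {"limit": 2}},
})
print(json.dumps(handle_request(request, caller_role="analyst"), indent=2))
```

A production server would normally be built on an MCP SDK rather than hand-rolled like this; the sketch only makes the validate, authorize, execute, and return steps visible.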

What are the capabilities of an MCP server?

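An MCP server acts as a smart adapter between AI systems and real-world tools. When a user asks for something in natural language, like “Get today’s sales report,” the model turns that request into a structured tool call, and the MCP server translates the call into the exact commands an app or API understands.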

Some examples include connecting to file system servers for document access, database servers for data queries, and productivity apps like Slack. This opens up a wide range of interactions between AI hosts and external systems.

Some specific tasks include:

- A GitHub MCP server can turn “List my open pull requests” into a GitHub API query.

- A File MCP server can take “Save this summary as a text file” and write it locally.

- A Sysdig MCP server can return relevant cloud security data for requests like “list recent alerts” or “fetch critical vulnerabilities running in Kubernetes.”
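To make the first example concrete, here is a minimal sketch of how a server might map a tool call onto a GitHub API query. It is not how any real GitHub MCP server is implemented: the tool name `list_open_pull_requests` and the function `build_open_prs_request` are hypothetical, and the code only builds the request URL for GitHub's REST search endpoint without sending it.

```python
from urllib.parse import urlencode

GITHUB_API = "https://api.github.com"


def build_open_prs_request(username):
    """Map a hypothetical `list_open_pull_requests` tool call to a search URL."""
    # GitHub search qualifiers: pull requests only, open, authored by the user.
    query = f"is:pr is:open author:{username}"
    return f"{GITHUB_API}/search/issues?{urlencode({'q': query})}"


# The structured call a model might emit for "List my open pull requests".
tool_call = {"name": "list_open_pull_requests",
             "arguments": {"username": "octocat"}}

url = build_open_prs_request(tool_call["arguments"]["username"])
print(url)
```

The model never sees the URL or the search syntax; it only needs to know the tool's name and arguments, and the server owns the API details.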

Beyond executing requests, MCP servers also:

- Tell AI models what actions are available (tool discovery).

- Interpret and run commands securely.

- Format responses into AI-readable outputs.

- Handle errors gracefully and return meaningful feedback.
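Tool discovery and error handling from the list above can be sketched as follows. The field names (`tools`, `name`, `description`, `inputSchema`, `content`, `isError`) follow the shapes MCP uses for tool listings and tool results; the `save_text_file` tool, the `call_tool` function, and the in-memory `storage` dictionary that stands in for the file system are assumptions made for illustration.

```python
import json

# Hypothetical tool registry, advertised to the client during discovery.
TOOL_DEFINITIONS = [
    {
        "name": "save_text_file",
        "description": "Save text to a file with the given name.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "filename": {"type": "string"},
                "text": {"type": "string"},
            },
            "required": ["filename", "text"],
        },
    }
]


def list_tools():
    """Tell the client which tools exist and what arguments they take."""
    return {"tools": TOOL_DEFINITIONS}


def call_tool(name, arguments, storage):
    """Run a tool and report failures as readable text with isError set."""
    try:
        if name != "save_text_file":
            raise ValueError(f"unknown tool: {name}")
        missing = [key for key in ("filename", "text") if key not in arguments]
        if missing:
            raise ValueError(f"missing arguments: {', '.join(missing)}")
        # In-memory stand-in for writing to disk.
        storage[arguments["filename"]] = arguments["text"]
        message = f"Saved {len(arguments['text'])} characters to {arguments['filename']}"
        return {"content": [{"type": "text", "text": message}], "isError": False}
    except ValueError as exc:
        return {"content": [{"type": "text", "text": str(exc)}], "isError": True}


storage = {}
print(json.dumps(list_tools(), indent=2))
ok = call_tool("save_text_file", {"filename": "summary.txt", "text": "hello"}, storage)
bad = call_tool("save_text_file", {"filename": "summary.txt"}, storage)
print(ok["content"][0]["text"])
print(bad["content"][0]["text"])
```

Returning the failure as ordinary text content, rather than raising, gives the model something it can read and act on, such as retrying with the missing argument.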

In short, MCP servers are the bridge that lets AI models interact safely and intelligently with external systems.

The Sysdig MCP Server

At Sysdig, we’ve built an MCP server that lets AI models securely access Sysdig’s Cloud Security APIs.

Through the Sysdig MCP Server, an AI assistant can:

- Query real-time security findings, vulnerabilities, and misconfigurations.

- Retrieve runtime threat detections and cloud posture data.

- Generate summaries or recommendations based on Sysdig Secure™ and Sysdig Monitor™ insights.

This means that LLMs — whether in ChatGPT, an internal AI platform, or another MCP-compatible system — can safely gain visibility into what’s happening across your cloud and container environments.

Because the Sysdig MCP Server follows the open MCP standard, it’s plug-and-play for any compatible AI client. There’s no need to expose credentials or write new integrations — everything happens securely within the protocol’s framework.

Conclusion

MCP servers introduce a plug-and-play standard that opens a whole world of tools and data sources to your AI assistant. Because the protocol adds structure and control to how external systems are accessed, you can bring third-party data and tools into your AI queries without fragile integrations or endless API work.
